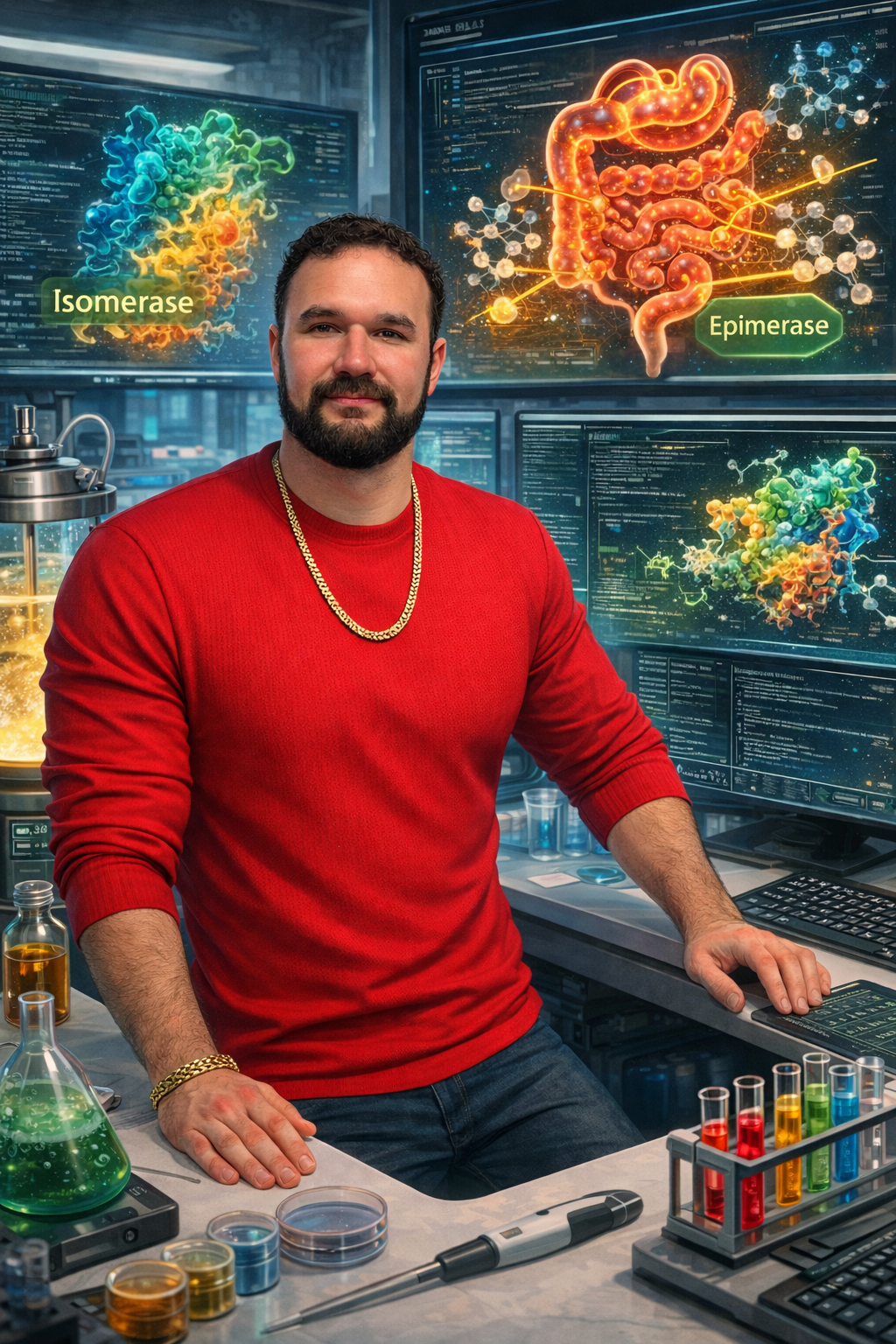

Danny Jesus Diaz, PhD

TLDR

I build AI systems that design and optimize novel proteins for real-world impact. My interests span cancer therapeutics, microplastic bioremediation, biosensors and diagnostics, sustainable biomanufacturing of chemicals and medicines, and, most recently, the reprogramming of human metabolism to prevent chronic diseases. When I'm not writing code or engineering proteins, I'm probably traveling to a conference, a mountain, or the beach. I enjoy 3D printing (usually molecules 😅), reading and learning about new topics, snowboarding, surfing, and spending time with the people who matter most to me. My faith in Jesus is a big part of who I am: it keeps me grounded, knowing that no weapon formed against me shall prosper, and it gives me a deep sense of purpose as I navigate the ups and downs of commercializing AI-engineered proteins. #blessed

Education

I received my PhD in Chemistry from the University of Texas at Austin, where I trained under Dr. Andrew Ellington and Dr. Eric Anslyn. During my doctoral work, I collaborated closely with both experimental protein engineers and computer scientists to design datasets, deep learning architectures, and training strategies that carry designs from computational model to experimental biomolecule. I led development of MutCompute, a structure-guided machine learning platform for protein engineering that significantly accelerated the optimization of proteins across a variety of applications. In summary, we improved the activity and stability of two plastic-eating enzymes, polymerases for COVID-19 diagnostics, a therapeutic enzyme undergoing preclinical trials for breast cancer, and various biocatalysts for pharmaceutical and chemical biomanufacturing, including two ene-reductase photoenzymes. See publications below.

What I am currently up to

I am a computational protein engineer and Research Scientist at UT Austin Computer Science (UTCS) and the Institute for Foundations of Machine Learning (IFML), where I lead the Deep Proteins Group. My team works at the intersection of artificial intelligence and biomolecular engineering. We develop sequence- and structure-based machine learning frameworks whose predictions translate directly into functional proteins, accelerating drug development and the industrial biomanufacturing of chemicals and drugs.

I consult for biotech and pharmaceutical companies through Intelligent Proteins, LLC, helping them integrate ML-driven protein engineering workflows into their R&D and commercial pipelines.

In 2025, I founded a stealth biotechnology startup focused on reprogramming human metabolism with AI-engineered enzymes to prevent chronic metabolic disease. We have raised $2M in pre-seed funding and are actively building our flagship product. We are keeping it low-key until summer 2026. If you are interested in investing, feel free to reach out.

Personal Email / Consulting Email / Investment Opportunities

Media

Patents

Mutations for improving activity and thermostability of petase enzymes

Hongyuan Lu, Daniel J Diaz, Hannah Cole, Raghav Shroff, Andrew D Ellington, Hal Alper

WO Patent WO2022076380A2

Computational redesign of PETase to break down the plastic used in water bottles and food containers. The N233K mutation, discovered through ML optimization, eliminates calcium dependence while boosting both activity and thermostability, enabling practical degradation of post-consumer plastic at scale.

Leaf-branch compost cutinase mutants

Hongyuan Lu, Daniel J Diaz, Andrew D Ellington, Hal Alper

WO Patent WO2023154690A2

ML-engineered plastic-degrading cutinase with practical performance improvements. It leverages the same calcium-elimination strategy (N233K) proven in PETase, enabling robust enzyme function without costly cofactor requirements.

Engineered human serine dehydratase enzymes and methods for treating cancer

Everett Stone, Ebru Cayir, Daniel J Diaz, Raghav Shroff

WO Patent WO2024006973A1

ML-engineered human serine dehydratase variants for luminal breast cancer therapy. These designs, now in preclinical trials, enhance enzyme function against therapeutic targets, demonstrating a direct therapeutic application of AI-driven protein engineering.

Recombinant proteins with increased solubility and stability

Andrew Ellington, Inyup Paik, Andre Maranhao, Sanchita Bhadra, David Walker, Daniel J Diaz, Ngo Phuoc

US Patent US20240011000A1

ML-optimized Bst DNA polymerase variants with superior solubility and stability for isothermal COVID-19 diagnostics. These variants enable rapid, on-site viral testing without a thermocycler, which is often unavailable in remote and resource-limited settings.

Methods and compositions related to modified methyltransferases and engineered biosensors

Andrew Ellington, Daniel J Diaz, Simon d'Oelsnitz

US Patent App. 63/493,065

ML-engineered norbelladine methyltransferase variants for efficient biomanufacturing of galantamine, a first-line Alzheimer's therapeutic. This is the first example of context-aware ML protein design conditioned on computationally modeled active-site ligand and cofactor geometry, enabling precise AI-guided enzyme engineering for pharmaceutical and commodity-chemical biomanufacturing.

Research Publications

Selected publications and patents are listed below. For a comprehensive list of my publications, please visit my Google Scholar profile. Representative papers are highlighted.

Machine Learning Papers

Triangle Multiplication is All You Need for Biomolecular Structure Representations

Jeffrey Ouyang-Zhang*, Pranav Murugan, Daniel J Diaz, Gianluca Scarpellini, Richard Strong Bowen, Nate Gruver, Adam Klivans, Philipp Krähenbühl, Aleksandra Faust, Maruan Al-Shedivat

International Conference on Learning Representations (ICLR), 2026

We introduce Pairmixer, a streamlined alternative to the Pairformer backbone used in AlphaFold3-style models, demonstrating that triangle multiplication alone is sufficient for high-quality biomolecular structure prediction. By eliminating triangle attention and sequence updates while preserving higher-order geometric reasoning, Pairmixer matches state-of-the-art folding and docking performance while reducing training cost by 34% and achieving up to 4× faster inference on long sequences. This architectural simplification substantially expands the scalability of structure prediction for large protein complexes, high-throughput ligand screening, and iterative binder design.
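Since the Pairmixer result rests on triangle multiplication, a minimal NumPy sketch of the "outgoing" triangle multiplicative update used in AlphaFold-style pair stacks may help. It is a simplified illustration, not Pairmixer's implementation: layer normalization, biases, and the "incoming" variant are omitted, and the weight names (`W_a`, `W_a_gate`, and so on) are hypothetical. Pair (i, j) is updated by summing the elementwise product of gated projections of edges (i, k) and (j, k) over every third residue k.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def triangle_multiply_outgoing(z: np.ndarray, params: dict) -> np.ndarray:
    """Simplified 'outgoing' triangle multiplicative update.

    z: pair representation of shape (N, N, c).
    params: hypothetical weights; layer norm and biases are omitted for brevity.
    Returns an update of shape (N, N, c).
    """
    # Gated projections of every edge into h hidden channels.
    a = sigmoid(z @ params["W_a_gate"]) * (z @ params["W_a"])  # (N, N, h)
    b = sigmoid(z @ params["W_b_gate"]) * (z @ params["W_b"])  # (N, N, h)
    # Edge (i, j) aggregates over every third node k: sum_k a[i, k] * b[j, k].
    t = np.einsum("ikh,jkh->ijh", a, b)  # (N, N, h)
    # Project back to c channels and apply an output gate.
    return sigmoid(z @ params["W_out_gate"]) * (t @ params["W_out"])


rng = np.random.default_rng(0)
N, c, h = 8, 16, 4
params = {
    "W_a": rng.normal(size=(c, h)), "W_a_gate": rng.normal(size=(c, h)),
    "W_b": rng.normal(size=(c, h)), "W_b_gate": rng.normal(size=(c, h)),
    "W_out": rng.normal(size=(h, c)), "W_out_gate": rng.normal(size=(c, c)),
}
z = rng.normal(size=(N, N, c))
update = triangle_multiply_outgoing(z, params)
print(update.shape)  # (8, 8, 16)
```

Because every pair aggregates over all N third residues, this update scales cubically with sequence length.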

Vector Virtual Screen: Scalable Optimization for Ultra-Large Combinatorial Libraries

Karl Heyer V, David Yang, Daniel J Diaz

bioRxiv, 2025

We introduce Vector Virtual Screen (VVS), a score-function-agnostic machine learning framework for efficiently navigating ultra-large combinatorial search spaces in virtual screening. VVS integrates arbitrary scoring functions with scalable optimization strategies to explore synthesis-on-demand libraries at previously intractable scale, achieving orders-of-magnitude improvements in search efficiency while maintaining high hit quality. This work substantially lowers the computational barrier to large-scale molecular discovery and enables practical exploration of chemical spaces far beyond traditional screening limits.

Generating functional and multistate proteins with a multimodal diffusion transformer

Bowen Jing*, Anna Sappington*, Mihir Bafna*, Ravi Shah, Adrina Tang, Rohith Krishna, Adam Klivans, Daniel J Diaz, Bonnie Berger

bioRxiv, 2025

We present ProDiT, a multimodal diffusion transformer that jointly models protein sequence and 3D structure to enable function-aware protein generation at scale. Trained on sequences, structures, and annotations spanning 214M proteins, ProDiT can be conditioned on hundreds of molecular functions (Gene Ontology terms) while preserving key active- and binding-site motifs. We further introduce a diffusion-based protocol for designing multistate proteins, demonstrating allosterically controllable enzymes by scaffolding catalytic sites that can be deactivated by a calcium effector, which unlocks design specifications that are difficult for prior sequence-only or structure-only generative models.

Ambient Proteins: Training Diffusion Models on Low-Quality Structures

Giannis Daras*, Jeffrey Ouyang-Zhang*, Krithika Ravishankar, Costis Daskalakis, Adam Klivans, Daniel J Diaz

NeurIPS, 2025

We introduce Ambient Protein Diffusion, a framework that enables protein generative models to learn from low-confidence AlphaFold structures rather than discarding them. By modeling prediction errors as structured corruption and adapting the diffusion objective accordingly, our method leverages the full AlphaFold Database while preserving structural fidelity. With only 16.7M parameters, Ambient Protein Diffusion surpasses prior 200M-parameter models, achieving state-of-the-art diversity, designability, and novelty: it improves diversity from 45% to 86% and designability from 68% to 86% on long proteins (700 residues). This work substantially expands the scalable generation of long, complex, and novel protein backbones.

Distilling structural representations into protein sequence models

Jeffrey Ouyang-Zhang, Chengyue Gong, Yue Zhao, Philipp Krähenbühl, Adam R Klivans, Daniel J Diaz

International Conference on Learning Representations (ICLR), 2025

We present a novel method for distilling structural representations into protein sequence models, which we call structure-tuning. We first pre-train two graph transformers on a large protein structure dataset, one with the EvoRank loss (MutRank) and one with a reconstruction loss (Atomic AutoEncoder). The representations of these structure models are tokenized using k-means clustering, and the resulting structure tokens are used to fine-tune ESM2's representations on the UniClust30 dataset, yielding what we call the Implicit Structure Model (ISM). We show that ISM's structure-enriched representations outperform a variety of state-of-the-art sequence-based and multimodal models on protein structure prediction and protein engineering tasks. ISM is a drop-in replacement for ESM2 and can be found here.
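The tokenization step can be sketched as plain Lloyd's k-means over per-residue structure embeddings, where each residue's token is the index of its nearest centroid. This is an illustrative NumPy sketch under stated assumptions: the embeddings are synthetic, and the cluster count, initialization, and iteration budget are hypothetical choices rather than ISM's actual settings.

```python
import numpy as np


def assign_tokens(x: np.ndarray, centroids: np.ndarray) -> np.ndarray:
    """Map each embedding (row of x) to the index of its nearest centroid."""
    d2 = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)  # (n, k)
    return d2.argmin(axis=1)


def fit_kmeans(x: np.ndarray, k: int, n_iter: int = 50, seed: int = 0) -> np.ndarray:
    """Lloyd's k-means; returns a (k, d) codebook of centroids."""
    rng = np.random.default_rng(seed)
    centroids = x[rng.choice(len(x), size=k, replace=False)].copy()
    for _ in range(n_iter):
        labels = assign_tokens(x, centroids)
        for j in range(k):
            members = x[labels == j]
            if len(members) > 0:  # keep the previous centroid if a cluster empties
                centroids[j] = members.mean(axis=0)
    return centroids


# Hypothetical per-residue embeddings: two well-separated groups of 50 residues.
rng = np.random.default_rng(1)
embeddings = np.concatenate([
    rng.normal(0.0, 0.1, size=(50, 2)),
    rng.normal(10.0, 0.1, size=(50, 2)),
])
codebook = fit_kmeans(embeddings, k=2)
tokens = assign_tokens(embeddings, codebook)  # one discrete token per residue
```

In a structure-tuning setup, an array like `tokens` could serve as per-residue targets for a sequence model; how ISM combines the tokens from its two structure models is not specified here.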

A Systematic Evaluation of The Language-of-Viral-Escape Model Using Multiple Machine Learning Frameworks

Brent Allman, Luiz Vieira, Daniel J Diaz, Claus O Wilke

Journal of the Royal Society Interface, 2025

It is critical to rapidly identify mutations with the potential for immune escape or increased disease burden (variants of concern). A recent study proposed that viral variants of concern can be identified using two quantities extracted from protein language models: grammaticality and semantic change. Grammaticality is intended to measure whether a viral protein variant is viable, and semantic change is intended to measure the variant's potential for immune escape. Here, we systematically test this hypothesis, taking advantage of several high-throughput datasets that have become available since the original study and evaluating additional machine learning models for calculating the grammaticality and semantic change metrics. We find that grammaticality correlates with protein viability, though the more traditional metric, ΔΔG, appears to be more effective. By contrast, we do not find compelling evidence that the semantic change metric can effectively identify immune escape mutations.
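For readers unfamiliar with the two metrics being tested, here is a minimal NumPy sketch of one common formulation, which is an assumption here rather than this paper's exact pipeline: semantic change as the L1 distance between mean-pooled language-model embeddings of the wildtype and mutant sequences, and grammaticality as the softmax probability the model assigns to the mutant residue at the mutated position. The embeddings and logits are placeholders for the outputs of whichever protein language model is being evaluated.

```python
import numpy as np


def semantic_change(wt_emb: np.ndarray, mut_emb: np.ndarray) -> float:
    """L1 distance between mean-pooled per-residue embeddings, each of shape (L, d)."""
    return float(np.abs(wt_emb.mean(axis=0) - mut_emb.mean(axis=0)).sum())


def grammaticality(logits_at_site: np.ndarray, mutant_index: int) -> float:
    """Softmax probability of the mutant amino acid at the mutated position.

    logits_at_site: shape (20,), the model's scores over amino acids at that site.
    """
    shifted = logits_at_site - logits_at_site.max()  # subtract max for numerical stability
    probs = np.exp(shifted) / np.exp(shifted).sum()
    return float(probs[mutant_index])


# Placeholder inputs standing in for a real language model's outputs.
wt_emb = np.zeros((3, 4))
mut_emb = np.ones((3, 4))
print(semantic_change(wt_emb, mut_emb))  # 4.0
print(grammaticality(np.zeros(20), mutant_index=5))  # 0.05
```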

Evolution-Inspired Loss Functions for Protein Representation Learning

Chengyue Gong, Adam Klivans, James Madigan Loy, Tianlong Chen, Qiang Liu, Daniel J Diaz

International Conference on Machine Learning (ICML), 2024

Current protein representation learning methods primarily rely on BERT- or GPT-style self-supervised learning and use wildtype accuracy as the primary training/validation metric. Wildtype accuracy, however, does not align with the primary goal of protein engineering: to suggest beneficial mutations rather than to identify what already appears in nature. To close this gap between pre-training objectives and downstream protein engineering tasks, we present Evolutionary Ranking (EvoRank), a training objective that incorporates evolutionary information derived from multiple sequence alignments (MSAs) to learn protein representations tailored to protein engineering. Across a variety of phenotypes and datasets, we demonstrate that an EvoRank pre-trained graph transformer (MutRank) achieves significant zero-shot performance improvements that are competitive with ML frameworks fine-tuned on experimental data. This is particularly important in protein engineering, where experimental data for fine-tuning are expensive to obtain.

Stability Oracle: A Structure-Based Graph-Transformer for Identifying Stabilizing Mutations

Daniel J Diaz, Chengyue Gong, Jeffrey Ouyang-Zhang, James M Loy, Jordan Wells, David Yang, Andrew D Ellington, Alexandros G Dimakis, Adam R Klivans

Nature Communications, 2024

Stability Oracle is a structure-based graph-transformer framework that is first pre-trained with BERT-style self-supervision on the MutComputeX dataset and then fine-tuned on a curated subset of the megascale cDNA-display proteolysis dataset. We introduce several innovations to address well-known challenges of data scarcity and bias, generalization, and computation time, including Thermodynamic Permutations for data augmentation, structural amino acid embeddings that model a mutation with a single structure, and a protein structure-specific attention-bias mechanism that makes graph transformers a viable alternative to graph neural networks.
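Thermodynamic Permutations rely on free energy being a state function: if ΔΔG is measured from the wildtype to several amino acids at one position, the ΔΔG between any two of those amino acids follows by subtraction, so n amino acids (wildtype included) yield n(n−1) ordered mutations. The Python sketch below illustrates that bookkeeping; the function name and dictionary input format are hypothetical, and any filtering Stability Oracle applies to the augmented set is not shown.

```python
from itertools import permutations


def thermodynamic_permutations(
    wt: str, ddg_from_wt: dict[str, float]
) -> dict[tuple[str, str], float]:
    """Expand wildtype-referenced ddG values at one position into all ordered pairs.

    ddg_from_wt maps each measured mutant amino acid to ddG(wt -> mutant).
    Because free energy is a state function,
    ddG(x -> y) = ddG(wt -> y) - ddG(wt -> x).
    """
    relative = {wt: 0.0, **ddg_from_wt}  # the wildtype is the zero reference
    return {(x, y): relative[y] - relative[x] for x, y in permutations(relative, 2)}


augmented = thermodynamic_permutations("A", {"G": 1.2, "V": -0.4})
print(len(augmented))  # 6 ordered pairs from 3 amino acids
print(round(augmented[("G", "V")], 2))  # -1.6
```

Two wildtype-referenced measurements thus become six training examples, including reverse mutations whose ΔΔG is the negation of the forward value.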

Binding Oracle: Fine-Tuning From Stability to Binding Free Energy

Chengyue Gong, Adam R Klivans, Jordan Wells, James Loy, Qiang Liu, Alexandros G Dimakis, Daniel J Diaz

NeurIPS GenBio Workshop Spotlight, 2023

Fine-tuning machine learning frameworks on a small experimental dataset is prone to overfitting. Here, we present Binding Oracle, a graph-transformer framework that fine-tunes Stability Oracle to predict the ΔΔG of binding for protein-protein interfaces (PPIs) via a technique we call Selective LoRA. Selective LoRA uses the gradient norm of each layer to select the subset of layers most sensitive to the fine-tuning dataset (here, a curated subset of SKEMPI 2.0, B1816) and then fine-tunes the selected layers with LoRA. By applying Selective LoRA to Stability Oracle, we achieve state-of-the-art performance on the S487 PPI test set and generalize across different types of PPIs.
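The two stages of Selective LoRA can be sketched in NumPy: rank layers by the norm of their gradient on the fine-tuning data, keep the top k, and wrap only those layers with a low-rank adapter. This is a minimal illustration under stated assumptions: the Frobenius norm, the value of k, and the adapter rank and scaling are hypothetical choices, and the `LoRALinear` class follows the standard LoRA recipe (a frozen weight plus a zero-initialized low-rank update) rather than Binding Oracle's code.

```python
import numpy as np


def select_layers_by_grad_norm(grads: dict[str, np.ndarray], k: int) -> list[str]:
    """Return the k layer names with the largest gradient norm on the fine-tuning data."""
    norms = {name: float(np.linalg.norm(g)) for name, g in grads.items()}
    return sorted(norms, key=norms.get, reverse=True)[:k]


class LoRALinear:
    """A frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, W: np.ndarray, r: int, alpha: float = 1.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        d_out, d_in = W.shape
        self.W = W  # pretrained weight, kept frozen
        self.A = rng.normal(scale=0.01, size=(r, d_in))  # trainable down-projection
        self.B = np.zeros((d_out, r))  # trainable up-projection; zero so training starts at W
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x has shape (batch, d_in); the output has shape (batch, d_out).
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T


# Hypothetical per-layer gradients from one pass over the fine-tuning set.
grads = {
    "layer_0": np.ones((2, 2)),  # norm 2
    "layer_1": np.zeros((2, 2)),  # norm 0
    "layer_2": np.full((2, 2), 3.0),  # norm 6
}
selected = select_layers_by_grad_norm(grads, k=2)
print(selected)  # ['layer_2', 'layer_0']
adapters = {name: LoRALinear(np.eye(2), r=1) for name in selected}
```

Restricting adapters to the most gradient-sensitive layers keeps the number of trainable parameters small, which is the point when the fine-tuning set is only a few thousand measurements.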

Predicting a Protein’s Stability under a Million Mutations

Jeffrey Ouyang-Zhang, Daniel J Diaz, Adam R Klivans, Philipp Krähenbühl

NeurIPS, 2023

The mutate everything framework enables fine-tuning of sequence-based (ESM2) and MSA-based (AlphaFold2) protein foundation models on phenotype data with parallel decoding. Here, we demonstrate how their representations can be fine-tuned on the cDNA-display proteolysis dataset with the mutate everything framework to predict the thermodynamic impact of single point mutations (ΔΔG). The AlphaFold2 fine-tuned model, StabilityFold, achieves results similar to Stability Oracle on a variety of test sets. More importantly, the mutate everything framework allows parallel decoding of single and higher-order amino acid substitutions into ΔΔG predictions. This capability not only enables rapid deep mutational scanning (DMS) inference on proteins but also makes double-mutant DMS inference computationally tractable.

HotProtein: A novel framework for protein thermostability prediction and editing

Tianlong Chen, Chengyue Gong, Daniel J Diaz, Xuxi Chen, Jordan Tyler Wells, Zhangyang Wang, Andrew Ellington, Alexandros G Dimakis, Adam Klivans

ICLR, 2023

We curated an organism-based temperature dataset (HotProteins) for distinguishing proteins with varying thermostability (cryophiles, psychrophiles, mesophiles, thermophiles, and hyperthermophiles). We proposed structure-aware pretraining (SAP) and factorized sparse tuning (FST) to fine-tune the representations of the sequence-based transformer ESM-1b, producing a classifier and a regressor that predict a protein's organism class or growth temperature.

Two sequence- and two structure-based ML models have learned different aspects of protein biochemistry

Anastasiya V Kulikova, Daniel J Diaz, Tianlong Chen, Jeffrey Cole, Andrew D Ellington, Claus O Wilke

Scientific Reports, 2023

We compare and contrast self-supervised sequence-based transformers and structure-based 3DCNN models. We find a bias-variance tradeoff between the two protein modalities: convolutions provide an inductive bias for protein structure, whereas the more expressive sequence-based transformers exhibit higher variance.

Learning the Local Landscape of Protein Structures with Convolutional Neural Networks

Anastasiya V Kulikova, Daniel J Diaz, James M Loy, Andrew D Ellington, Claus O Wilke

Journal of Biological Physics, 2021

We compare how self-supervised 3DCNNs learn the local mutational landscape of proteins against evolution, as captured by multiple sequence alignments (MSAs). We find that the amino acid likelihoods of structure-based 3DCNNs correlate only weakly with MSAs and that their wildtype confidence depends on the structural position of the residue, with core residues predicted more confidently.
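One way to quantify "correlation with MSAs" is to compare, position by position, a model's 20 amino acid probabilities with the amino acid frequencies in the corresponding MSA column. The NumPy sketch below does this with a Pearson correlation; it is an assumed, illustrative metric rather than the paper's exact analysis, and the toy alignment is hypothetical.

```python
import numpy as np

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
AA_INDEX = {aa: i for i, aa in enumerate(AMINO_ACIDS)}


def msa_column_frequencies(msa: list[str]) -> np.ndarray:
    """Per-position amino acid frequencies, shape (L, 20); gaps and unknown symbols are ignored."""
    counts = np.zeros((len(msa[0]), len(AMINO_ACIDS)))
    for seq in msa:
        for pos, aa in enumerate(seq):
            if aa in AA_INDEX:
                counts[pos, AA_INDEX[aa]] += 1
    totals = counts.sum(axis=1, keepdims=True)
    return np.divide(counts, totals, out=np.zeros_like(counts), where=totals > 0)


def per_position_pearson(model_probs: np.ndarray, msa_freqs: np.ndarray) -> np.ndarray:
    """Pearson r between model probabilities and MSA frequencies at each position (NaN if undefined)."""
    p = model_probs - model_probs.mean(axis=1, keepdims=True)
    q = msa_freqs - msa_freqs.mean(axis=1, keepdims=True)
    denom = np.linalg.norm(p, axis=1) * np.linalg.norm(q, axis=1)
    with np.errstate(invalid="ignore", divide="ignore"):
        return (p * q).sum(axis=1) / denom


msa = ["AC", "AD", "A-"]  # toy three-sequence alignment of length 2
freqs = msa_column_frequencies(msa)  # column 0: all A; column 1: half C, half D
```

In practice `model_probs` would be the 3DCNN's (L, 20) softmax output over the same positions, and the per-position values could then be grouped by structural context (for example, core versus surface).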

Protein Papers

Machine learning-guided engineering of T7 RNA polymerase and mRNA capping enzymes for enhanced gene expression in eukaryotic systems

Seung-Gyun Woo, Danny J. Diaz, Wantae Kim, Mason Galliver, Andrew D. Ellington

Chemical Engineering Journal, 2025

We present an ML-guided strategy to engineer a high-performance eukaryotic transcription platform by co-optimizing both T7 RNA polymerase and a fused viral mRNA capping enzyme, rather than treating each component independently. We integrate complementary structure-based protein engineering frameworks (self-supervised MutCompute/MutComputeX, evolution-aware fine-tuned MutRank, and stability fine-tuned Stability Oracle) to design mutations using both crystallographic and AlphaFold2 structural models. Variants were screened in yeast, where we identified additive substitutions that yield >10× improvements in gene expression and can be further stacked with variants from previous directed-evolution experiments to achieve ~13× gains. The resulting fusion enzymes expand the practicality of orthogonal, high-efficiency gene expression systems for synthetic biology, metabolic engineering, and mRNA production.

Engineering a photoenzyme to use red light

Jose M. Carceller, Bhumika Jayee, Claire G. Page, Daniel G. Oblinsky, Gustavo Mondragón-Solórzano, Nithin Chintala, Jingzhe Cao, Zayed Alassad, Zheyu Zhang, Nathaniel White, Daniel J. Diaz, Andrew D. Ellington, Gregory D. Scholes, Sijia S. Dong, Todd K. Hyster

Cell Chem, 2024

Previously, we engineered an ene-reductase (ERED) photoenzyme capable of asymmetric synthesis of α-chloroamides under blue light using directed evolution and machine learning-guided protein engineering with MutComputeX. Here, we allosterically tune the electronic structure of the cofactor-substrate complex using directed evolution and MutComputeX, dramatically increasing catalysis under red light (99% yields). Computational studies reveal different electronic transitions under cyan and red light and show how mutations at the protein surface allosterically tune the active-site complex.

Biosensor and machine learning-aided engineering of an amaryllidaceae enzyme

Simon d'Oelsnitz, Daniel J Diaz, Daniel J Acosta, Tyler L Dangerfield, Mason W Schechter, Matthew B Minus, James R Howard, Hannah Do, James Loy, Hal Alper, Andrew D Ellington

Nature Communications, 2024

We engineered a transcription factor and a methyltransferase to improve the regioselectivity and titer for production of 4'-O-methylnorbelladine. To engineer the 4'-O-methyltransferase, we developed MutComputeX, a self-supervised 3DResNet trained to generalize to protein-ligand, protein-nucleotide, and protein-protein interfaces. MutComputeX was used to design mutations on a computationally modeled ternary complex: an AlphaFold structure of the methyltransferase with SAH and norbelladine docked using Gnina. This is the first time three machine learning models (AlphaFold, Gnina, MutComputeX) were combined to engineer the surface and active site of an enzyme, paired with an engineered transcription factor for high-throughput screening.

Asymmetric Synthesis of α-Chloroamides via Photoenzymatic Hydroalkylation of Olefins

Yi Liu, Sophie G Bender, Damien Sorigue, Daniel J Diaz, Andrew D Ellington, Greg Mann, Simon Allmendinger, Todd K Hyster

Journal of the American Chemical Society, 2024

We engineered an ene-reductase photoenzyme capable of asymmetric synthesis of α-chloroamides under blue light. MutComputeX was used to identify several mutations that improved the activity and stereoselectivity observed under blue light.

Machine learning-aided engineering of hydrolases for PET depolymerization

Hongyuan Lu, Daniel J Diaz, Natalie J Czarnecki, Congzhi Zhu, Wantae Kim, Raghav Shroff, Daniel J Acosta, Bradley R Alexander, Hannah O Cole, Yan Zhang, Nathaniel A Lynd, Andrew D Ellington, Hal S Alper

Nature, 2022

We used MutCompute to guide the engineering of mesophilic and thermophilic PET hydrolases. We examined the ability of the mesophilic PET hydrolase (FAST-PETase) to depolymerize post-consumer PET waste: FAST-PETase depolymerized ~50 different post-consumer PET products within 2-4 days. Furthermore, the ML designs increased the depolymerization capacity of the thermophilic PET hydrolase (ICCM) by 100%. Here is a time-lapse video of the depolymerization of a full PET container from Walmart.

Improved Bst DNA Polymerase Variants Derived via a Machine Learning Approach

Inyup Paik, Phuoc HT Ngo, Raghav Shroff, Daniel J Diaz, Andre C Maranhao, David JF Walker, Sanchita Bhadra, Andrew D Ellington

Biochemistry, 2021

Bst polymerase was stabilized via ML-guided protein engineering to shorten the diagnostic time of LAMP-OSD assays during the height of the COVID-19 pandemic. LAMP-OSD is an isothermal DNA amplification technique that enables field diagnosis of COVID-19 in resource-limited settings.

GroovDB: A database of ligand-inducible transcription factors

Simon d’Oelsnitz, Joshua D Love, Daniel J Diaz, Andrew D Ellington

ACS SynBio, 2022

A database of ligand-inducible transcription factors. These transcription factors serve as starting points for developing genetic biosensors for high-throughput screening.

Discovery of novel gain-of-function mutations guided by structure-based deep learning

Raghav Shroff, Austin W Cole, Daniel J Diaz, Barrett R Morrow, Isaac Donnell, Ankur Annapareddy, Jimmy Gollihar, Andrew D Ellington, Ross Thyer

ACS SynBio, 2020

The development and initial experimental validation of the MutCompute framework: a self-supervised 3DCNN trained on the local chemistry surrounding an amino acid. The model was experimentally characterized for its ability to identify residues where the wildtype amino acid is incongruent with its surrounding chemical environment (protein only) and primed for gain-of-function. Here, BFP, phosphomannose isomerase, and beta-lactamase were engineered via machine learning.

Reviews

Using machine learning to predict the effects and consequences of mutations in proteins

Daniel J Diaz, Anastasiya V Kulikova, Andrew D Ellington, Claus O Wilke

Current Opinion in Structural Biology, 2023

A review of state-of-the-art machine learning frameworks (as of July 2022) for characterizing the functional and stability effects of point mutations on proteins.

Pushing Differential Sensing Further: The Next Steps in Design and Analysis of Bio-Inspired Cross-Reactive Arrays

Hazel A Fargher, Simon d'Oelsnitz, Daniel J Diaz, Eric V Anslyn

Analysis & Sensing, 2023

A perspective on future technological developments in differential sensing.

Website source code was taken and modified from Jon Barron's website.